Stanford officials’ blaming of the algorithm glossed over the fact that the system was simply following a set of rules put in place by people. Algorithm designers worry that casting blame on what in fact are mathematical formulas created by human beings will feed public distrust of the vaccines’ rollout.

The real problem, they argue, is arbitrary and opaque decision-making that doesn’t engage with the people who would be most affected by the decisions. If how the algorithm worked had been discussed more transparently beforehand, they argue, medical professionals would have been more confident in the results – or even, perhaps, spotted the oversight in advance.

Students, teachers and parents last week protested after their A-level results were downgraded by a government algorithm. Credit: Getty Images

Algorithms, they argue, could play an important role in quickly and equitably helping distribute limited supplies of the vaccine to the people who would need it most. Unlike their human designers, algorithms can’t be lobbied, intimidated, browbeaten or bribed, and they apply the same rules to every participant, unbiased by any personal relationship or grudge.

But with limited public-health guidance and so many factors at play, the algorithms could also end up being blamed when things go south. Their application to potentially life-altering medicine only serves to raise the stakes.

“On this huge global scale, the answer can’t be that people just operate off their own gut instincts and judgment calls,” said Cat Hicks, a senior research scientist who writes algorithms and builds predictive models at the education nonprofit Khan Academy.

This data “gives us the chance to agree on something, to define a standard of evidence and say this matched what we all agreed to,” she added. “But you can’t cut humans out of the process. There needs to be testing, verifying, trust-building so we have more transparency . . . and can think about: Which people might get left out?”

Algorithms are key to search results. Credit: AP

These clashes over vaccine transparency could play a growing role as supplies move from hospitals to private employers – and as people itch to resume their normal lives. A Centres for Disease Control and Prevention advisory panel last week recommended that seniors and workers in essential industries – including police officers, teachers and grocery-store workers – be given access to vaccines first after health care needs are met.

But algorithms are already playing a key role in deciding vaccine deployments. Some of the first people to be vaccinated in the US, earlier this month at George Washington University Hospital, were selected by an algorithm that scored their age, medical conditions and infection risk, a federal official told the New York Times.

Algorithms are also guiding the federal government’s mass distribution of the vaccine nationwide. The Trump administration’s Operation Warp Speed is using Tiberius, a system developed by the data-mining company Palantir, to analyse population and public-health data and determine the size and priority of vaccine shipments to individual states. The effort has already been marred by supply miscommunications and shipment delays.

The Stanford “vaccination sequence score” algorithm used a handful of variables – including an employee’s age and the estimated prevalence of COVID-19 infection in their job role – to score each employee and establish their place in line. People aged 65 or older, or 25 or younger, got a bonus of 0.5 points.

But the issue, some algorithm designers said, was in how the different scores were calculated. A 65-year-old employee working from home would get 1.15 points added to their score, a figure that includes the 0.5-point bonus – based purely on their age. But an employee who worked in a job where half the employees tested positive for the coronavirus would get only 0.5 additional points.
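The arithmetic in those two cases can be reproduced with a short sketch. It is an inference from the figures reported here, not Stanford's published formula: it assumes age counted for 0.01 points per year on top of the 0.5-point bonus, and that the job-role component simply equalled the share of positive tests. The function names, parameter names and the 30-year-old comparison case are illustrative.

```python
def age_points(age: int) -> float:
    """Age component: assumed 0.01 points per year, plus the reported 0.5 bonus."""
    bonus = 0.5 if age >= 65 or age <= 25 else 0.0
    return age * 0.01 + bonus


def job_points(positive_share: float) -> float:
    """Job-role component: assumed equal to the share of positive tests (0.0 to 1.0)."""
    return positive_share


def sequence_score(age: int, positive_share: float) -> float:
    """Unweighted sum of the two components discussed in the article."""
    return age_points(age) + job_points(positive_share)


# A 65-year-old working from home (assumed zero job-role prevalence).
remote_senior = sequence_score(age=65, positive_share=0.0)
# A hypothetical 30-year-old in a role where half the staff tested positive.
front_line = sequence_score(age=30, positive_share=0.5)

print(f"remote 65-year-old:     {remote_senior:.2f}")
print(f"front-line 30-year-old: {front_line:.2f}")
```

Under these assumptions the remote 65-year-old outscores the front-line worker, which is the ordering the residents objected to.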

That scoring-system flaw, among others, left medical residents confused and frustrated when they saw that high-level administrators and doctors working from home were being vaccinated before medical professionals in patient rooms every day. Only seven of the hospital’s resident physicians were among the first 5000 in line.

Unlike more sophisticated algorithms, the Stanford score does not appear to have given extra “weight” to more important factors, which could have prevented any single data point, like age, from overly skewing the results. Further compounding the problem: Some residents told ProPublica that they were disadvantaged because they worked in multiple areas across the hospital and could not designate a single department, which lowered their score.
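One way to picture the missing weighting is a weighted sum, in which each component is scaled before it is added. The sketch below is illustrative only: the weight of 3 is a hypothetical value chosen for the example, not a figure from Stanford or any guideline, and the 0.30 age points given to the front-line worker are likewise an assumption. The 1.15 and 0.5 values come from the cases described above.

```python
def weighted_score(age_pts: float, exposure_pts: float,
                   age_weight: float = 1.0, exposure_weight: float = 1.0) -> float:
    """Weighted sum of two score components; weights of 1.0 give the plain sum."""
    return age_weight * age_pts + exposure_weight * exposure_pts


# Component values as (age points, exposure points).
remote_senior = (1.15, 0.0)  # the article's 65-year-old working from home
front_line = (0.30, 0.5)     # 0.30 age points is an assumed value

for label, w in [("unweighted", 1.0), ("exposure x3 (hypothetical)", 3.0)]:
    a = weighted_score(*remote_senior, exposure_weight=w)
    b = weighted_score(*front_line, exposure_weight=w)
    first = "remote senior" if a > b else "front-line worker"
    print(f"{label}: {a:.2f} vs {b:.2f} -> {first} goes first")
```

With equal weights the remote senior ranks first; with exposure weighted more heavily the order flips, which is the kind of correction the designers quoted here had in mind.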

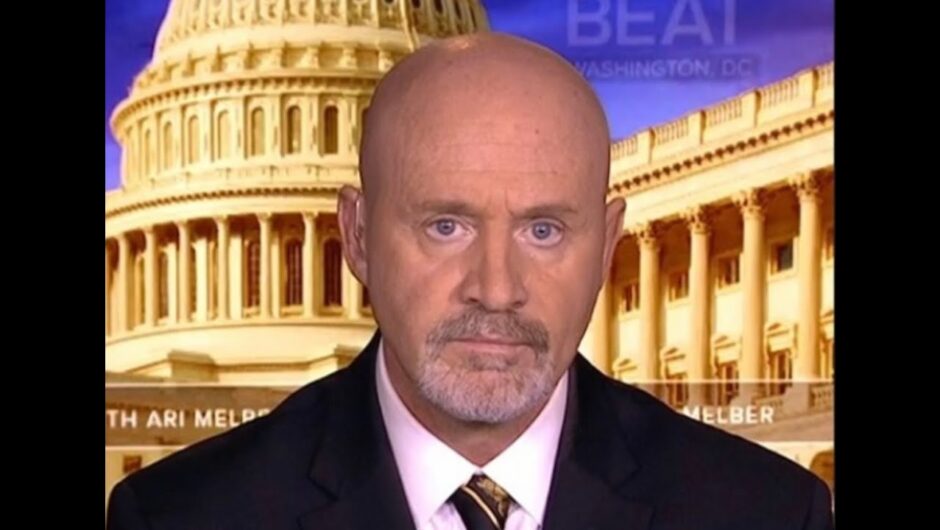

When dozens of residents and others flooded the medical centre in protest, a Stanford director defended the hospital by saying ethicists and infectious-disease experts had worked for weeks to prepare a fair system but that their “very complex algorithm clearly didn’t work.” In a video of the scene, some protesters can be heard saying, “F— the algorithm” and “Algorithms suck.”

In an email to pediatrics residents and fellows obtained by The Washington Post, officials said “the Stanford vaccine algorithm failed to prioritise house staff,” such as young doctors and medical-school residents, and that “this should never have happened nor unfolded the way it did.”

Stanford Medicine has since said in a statement that it takes “complete responsibility” for errors in the vaccine distribution plan and that it would move to quickly provide vaccinations for its front-line workforce.

(Stanford is not alone in facing blowback: At Barnes Jewish Hospital in St. Louis, front-line nurses have launched a petition criticising the hospital’s decision to prioritise workers by age, rather than exposure risk. Hospital administrators have said they’re just following federal guidelines.)

An algorithm is a fancy word for a simple set of rules: It’s a formula, like a recipe, that uses data or other ingredients to produce a predictable result. For many, the term evokes the kind of machine-learning software used to translate languages, drive cars or identify faces in images without human input.

These algorithms can refine how they work over time using a basic form of artificial intelligence. We interact with them every day, because they decide the order of the search results and social media posts we see online.

But the algorithms make complex decisions without explaining to people exactly how they got there – and that “black box” can be unnerving to people who may question the end result. Problems with how the algorithms were designed or with the data they’re fed can end up distorting the result, with disastrous consequences – like the racial biases, inaccuracies and false arrests of facial recognition systems used by police.

To the algorithms, it’s all just math – which, as computer tools, they can do exceptionally well. But they all too often get the blame for what are, in essence, human problems. People write the code in algorithms. They build the databases used to “train” the algorithms to perform. And in cases like Stanford’s, they overlook or ignore the potential weaknesses that could critically undermine the algorithms’ results.

Most data scientists and AI researchers say the only solution is more transparency: If people can see how the mix of algorithms, data and computing power is designed, they can more reliably catch errors before things go wrong.

But because the companies that design algorithms want to protect their proprietary work, or because the systems are too complicated to easily outline, such transparency is quite rare. People whose lives depend on the algorithm’s conclusions are asked to simply trust that the system, however it works, is working as intended.

Critics have worried that the use of algorithms for health-care decision-making could lead to dangerous results.

Researchers last year found that a medical algorithm by the health-care company Optum had inadvertently disadvantaged black patients by ranking them lower in need for additional care compared to white patients, according to the journal Science. A spokesman for the company told The Washington Post then that the “predictive algorithms that power these tools should be continually reviewed and refined.”

And this summer, students in the United Kingdom took to the streets to protest another algorithm that had been used to rank them for university placement. Because tests were disrupted during the pandemic, the country’s exam regulator automatically ranked students based on past grades and school performance – and, in the process, inadvertently boosted students at elite schools while penalising those with less privileged backgrounds. The British government reversed course shortly after.

“If we lived in a thriving, economically just society, people might trust algorithms. But we (mostly) don’t,” wrote Jack Clark, the policy director for the research lab OpenAI, in a newsletter last week. “We live in societies which are using opaque systems to make determinations that affect the lives of people, which seems increasingly unfair to most.”

The Stanford backlash, he added, is just “a harbinger of things to come.”

Washington Post
