Artificial intelligence experts have warned that as facial recognition technology continues to advance, minorities will face further targeting and persecution without proper safeguards in place.

The Washington Post recently revealed Chinese telecommunications giant Huawei worked on a facial recognition system that monitors and tracks China’s Uighur minority. The report refers to documents posted publicly to Huawei’s website.

Huawei, together with Chinese artificial intelligence firm Megvii, tested the software on the company’s video cloud infrastructure to see if Huawei’s hardware was compatible with Megvii’s facial recognition software.

The trial reportedly tested a “Uighur alert” feature that could determine an individual’s “ethnicity” and forward those details on to Chinese authorities.

The ethnic minority Uighurs are a repressed Muslim group that has faced ongoing targeting by the Chinese government.

China has been accused of forcing more than one million Uighurs and other mostly Muslim minorities into re-education camps in its remote Xinjiang region – assertions Beijing has continually denied.

How accurate would the technology be?

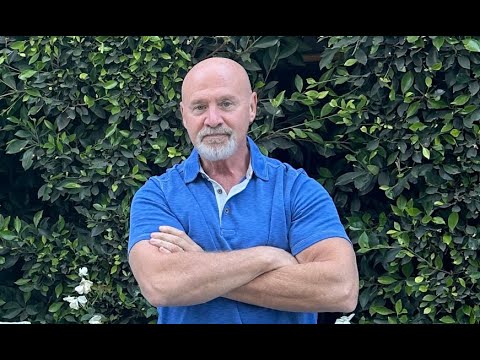

Professor Toby Walsh, a leading researcher in artificial intelligence at UNSW, said such technology would be of “variable” quality, especially as it’s not being used in a carefully controlled environment.

“It’s very variable,” he told SBS News.

“It depends on the conditions in which we’re used to seeing face recognition. When you have multiple scans at the airport, and it recognises your face – that’s a very controlled environment. But equally, what happens if you put it out in the wild is much less certain.”

He noted there are examples around the world where facial recognition technology has been used by law enforcement and overwhelmingly failed to achieve its purpose with accuracy.

In 2018, for example, it was reported that British police tested similar software and had only a two per cent success rate in identifying the right people when it was used at large events.

Fears AI could further target persecuted minorities

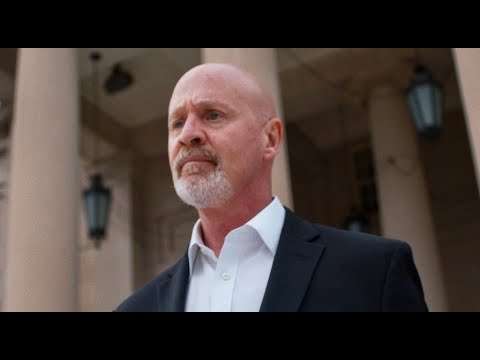

But Lucie Krahulcova, a digital rights advocate who works for Digital Rights Watch, noted that while facial recognition technology still struggles more to identify people of colour and other minorities, it’s catching up fast.

“It’s going to get trained across more minority faces. It’s an unpleasant circle,” she told SBS News.

“The development of the technologies – they didn’t develop it to target minorities. But unless there are safeguards in place, it’s just going to get better at targeting them.”

Ms Krahulcova said one of the biggest issues, on a global level, is that the technology is sold as an essential tool in catching terrorists and other hardline criminals, but can then be used to invade one’s privacy and – in extreme cases – actively target minorities.

“The biggest issue is that the technology is often sold as an essential tool for law enforcement, when really what you’re seeing play out in China is the worst-case scenario, where it’s used not to catch criminals,” she said.

“That’s the selling point in a lot of democratic countries – that this technology will be used to catch criminals. But realistically, you’re not passing any safeguards.

“So what we’re seeing in China is the worst-case scenario where that technology is being employed, explicitly, against a certain demographic.”

She warned that as the technology improved, persecuted minorities around the world would be further targeted.

The European Union’s Fundamental Rights Agency (FRA) released a report on Monday arguing people need stronger protection from the effects of artificial intelligence.

Much of the attention on developments in AI “focuses on its potential to support economic growth”, the report said.

But it added: “How different technologies can affect fundamental rights has received less attention.”

“Technology moves quicker than the law,” FRA director Michael O’Flaherty said in the report.

“We need to seize the chance now to ensure that the future EU regulatory framework for AI is firmly grounded in respect for human and fundamental rights.”

More research funding was needed into the “potentially discriminatory effects of AI”, the agency added.

“Any future AI legislation has to consider” possible discriminatory effects and impediments to justice “and create effective safeguards”, the agency said in a statement accompanying the report.

‘We’re setting ourselves up to be hypocrites’

Ms Krahulcova said the international community must “absolutely” condemn China over its use of artificial intelligence to target minorities.

A key problem here, however, is that western governments are embracing these technologies themselves for everyday use.

“What’s most concerning is that these technologies get passed into existence, created to fight some massive crime, like terrorism … but then they’re used in everyday operations,” she said.

“If you were going to use this sort of technology to police everyday crimes, you’d have a very different debate in Parliament, which is why it gets attached to something horrendous, like terrorism – that’s the language Home Affairs in Australia uses.

“I think the biggest concern is it gets rushed out, because there’s this urgent, hypothetical thing. And then it’s being used excessively, to police all sorts of things. And there’s not enough safeguards or accountability built into the system for those everyday uses.”

Ms Krahulcova said countries like Australia need to “take a hard look at the precedent we’re setting”, and ask whether we’re potentially setting ourselves up to be hypocrites in condemning other countries.

How Australia is leading the push

That said, Professor Walsh noted that Australia is in some ways leading the global conversation around regulating artificial intelligence tools.

“We definitely have to regulate. I think that’s one of the things we’ve been realising over the last couple of years – is that it’s entirely possible to regulate the space. Look at Europe with the General Data Protection Regulation.”

The General Data Protection Regulation, or GDPR, is a regulation that demands businesses protect the personal data and privacy of citizens in the European Union, for transactions within EU member states.

But Professor Walsh says Australia is also a global leader in regulating the digital space.

“We’ve already discovered that here with Australia – we’re the second country on the planet to have a Google tax. And look at our response to what happened to Christchurch – which is that we will hold you accountable for offensive content that is corrupting and causing terrorist acts to be carried out.”

He also referenced the “tech tax” – Australia’s proposal to divert profits from Facebook and Google using competition law to support journalism – which is now being considered in Europe.

“The UK is now having the same debate. Often in small countries like here, we can be a leader for what happens overseas as well.”

“If you work with any large Chinese corporation, you’re essentially working with the Chinese state,” he added.

“Your boundaries between where the state ends and those corporations begin is very fuzzy – much more so than if it was Apple, which has worked very hard to protect people’s privacy. That’s not going to be true of a company like Huawei.

“Right at the top of government these days, they’re thinking about sovereignty and critical technologies where we need to maintain sovereign capability.

“Otherwise, we’re going to find all our switching gear is Huawei’s, and who knows what they’ll do with our information.”